GenAI in the Enterprise: It’s Getting Personal

An analysis of how employees are increasingly blending personal and professional boundaries in generative AI use

Executive Summary

Generative AI has moved from novelty to necessity in the modern enterprise. Harmonic Security’s Q3 2025 analysis of more than three million prompts and file uploads submitted to GenAI tools shows that adoption continues to rise, but so does the sensitivity of the data flowing through AI platforms.

The story this quarter is one of acceleration and personalization: employees are integrating AI into their daily work with little distinction between personal and professional use.

Key findings for Q3 2025:

- 26.38% of all uploaded files submitted to GenAI tools contained sensitive information, up from 22% in Q2.

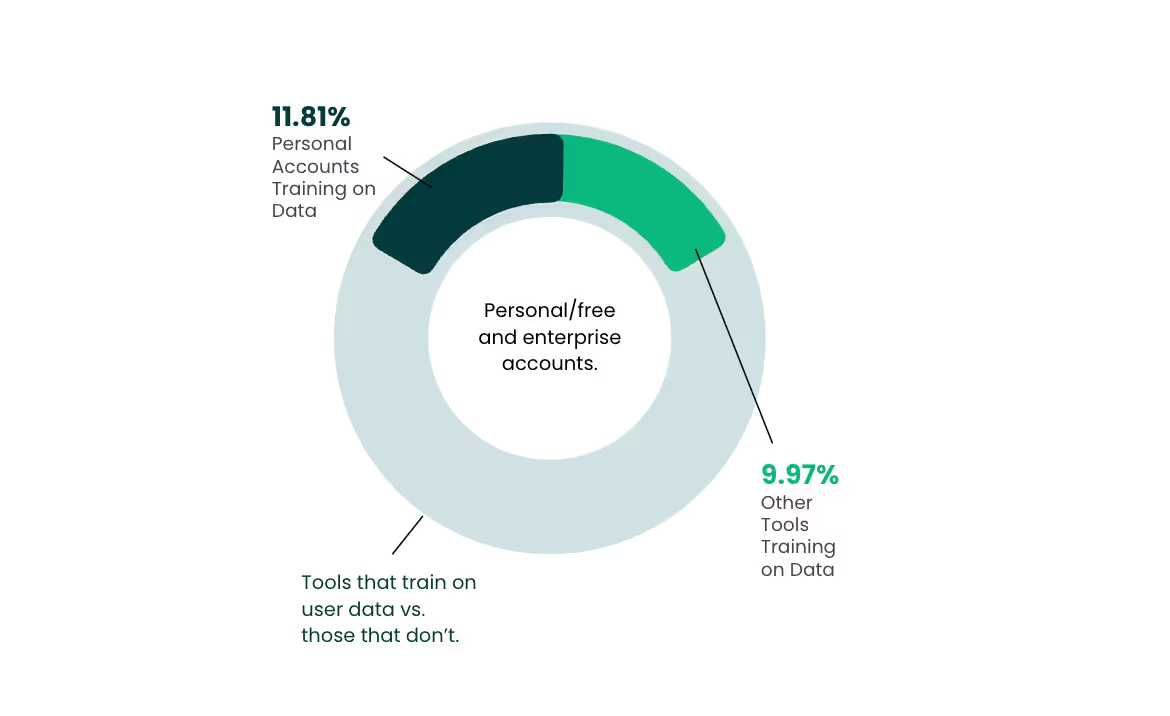

- 21.81% of sensitive data went into tools that train on user inputs, including the free versions of ChatGPT, Gemini, Claude and Meta AI.

- 11.84% of sensitive data exposures occurred through free or personal AI accounts.

- Employees at the average organization used 27 distinct AI tools in Q3.

- Enterprises uploaded an average of 4.427 GB of data to GenAI platforms, up from 1.32 GB in Q2.

- 57.25% of sensitive uploads involved business and legal data, led by legal discourse, M&A documents, financial projections, and investment portfolios.

- Technical data (particularly proprietary source code and access credentials) remains a persistent risk, accounting for 25% of sensitive data exposures.

Enterprises are no longer simply experimenting with generative AI; they’ve seen the benefits and aren’t going back. This means the challenge has shifted from adoption to control: managing the flow of sensitive information through an ecosystem that blurs the line between company and individual.

Methodology

This report draws on anonymized enterprise data collected between July and September 2025 through Harmonic Protect, which tracks all GenAI use in the browser. The analysis includes over three million prompts and file uploads across 300 generative and AI-embedded tools, spanning organizations in the United States and the United Kingdom.

The data reflects real-world employee activity within security-conscious enterprises using Harmonic’s monitoring solutions. No personally identifiable information or proprietary file contents left customer environments. All data was aggregated and sanitized before analysis.

The study focuses on browser-based SaaS and GenAI tools. Native mobile and direct API interactions fall outside the dataset, meaning these results likely represent a conservative view of overall AI exposure.

The Changing Shape of AI Use

Q3 2025 marked a decisive phase in the evolution of workplace AI. What began as experimentation has become ingrained. Generative AI is now embedded directly in the interfaces where employees already work, especially the browser.

In July, Perplexity introduced Comet, a research-oriented AI browser. By September, Atlassian announced plans to acquire The Browser Company, creators of Arc and Dia, to bring AI-native browsing into the enterprise productivity stack. Opera closed the quarter with the release of Neon, an AI browser capable of executing local tasks.

Each launch reflected the same idea: that the web itself is becoming an intelligent workspace.

Meanwhile, research published by MIT in August confirmed what many organizations already suspected: employees are not waiting for corporate AI deployments. They are bringing their own tools. Harmonic’s data mirrors that finding: personal accounts, browser extensions, and free-tier AI services have become the default AI environment for millions of professionals.

This shift represents a fundamental inversion of enterprise IT. Rather than companies introducing AI to their employees, employees are introducing AI to their companies.

Findings

Overall Trends

The most dramatic shift in Q3 was in volume. Enterprises uploaded more than three times as much data to generative AI platforms as in the previous quarter (4.427 GB versus 1.32 GB), signaling that AI tools are now embedded in everyday workflows rather than confined to pilots or side projects.

The share of sensitive content rose from 22% to 26.38%, continuing a steady upward trend. Employees are clearly growing more confident in using AI for substantive, confidential work, such as financial modeling, contract analysis and code review.

Interestingly, the number of new GenAI tools introduced by employees fell from 23 in Q2 to 11 in Q3. This suggests that organizations are moving from discovery to consolidation. Rather than testing every new platform, users are deepening their reliance on a smaller set of tools.

Personal Accounts and Data Persistence

Personal accounts and free-tier tools accounted for 11.84% of all sensitive data exposures in Q3. While that may sound modest, the implications are profound. These accounts often retain history and context, meaning sensitive business information can persist in personal workspaces indefinitely.

When employees leave a company, they may still retain AI accounts containing enterprise data. Because that data resides in personal systems, it sits outside corporate control—and often within environments that train on user content.

The risk is no longer limited to files leaving the network; it extends to the memory of AI systems themselves.

Types of Data Exposed

Across all categories, the most frequently exposed data type was business and legal data. This includes legal discourse, merger and acquisition data, and financial projections.

While PII and customer data were still significantly represented, critical business data was exposed to GenAI tools far more often. This likely points to a gap in enterprise data security.

In the following sections, we break down the sub-categories of data exposed to GenAI tools.

Personally Identifiable Information

Fifteen percent of sensitive data exposures involved PII. Many of these exposures stemmed from administrative tasks involving HR or employee records, often simple attempts to automate report generation or policy documentation. These well-intentioned productivity shortcuts inadvertently introduce privacy risk.

Exposure Type

- General PII (44.1%)

- Employee PII (26.2%)

- Payroll Data (15.8%)

- Employment Records (8.6%)

- Bulk PII (3.3%)

- PHI (1.5%)

- Clinical Trials / EHR (0.5%)

Technical Data

As expected, developers remain among the most active AI users. With generic source code detections omitted, technical data comprised 25% of all sensitive data.

Proprietary source code made up two-thirds of all technical exposures, followed by access credentials and security incident reports. The data indicates a continued pattern of engineers pasting internal code snippets into GenAI tools for debugging or optimization.

Exposure Type

- Proprietary Source Code (65.0%)

- Access Keys / Credentials (24.0%)

- Security Incident Reports (11.0%)

* This analysis omitted counts of generic “code”
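Credentials pasted alongside code can often be caught before they reach a GenAI tool with simple pattern matching. The sketch below is a minimal illustration under stated assumptions, not Harmonic's detection logic: `SECRET_PATTERNS` and `find_secrets` are hypothetical names, and the patterns cover only a few well-known formats (AWS access key IDs, GitHub personal access tokens, PEM private key headers, and quoted values assigned to names such as `password`). Production secret scanners rely on much larger, tuned rule sets.

```python
import re

# Illustrative patterns only (hypothetical rule set); real scanners use far more rules.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # A quoted value of 8+ characters assigned to a secret-sounding name.
    "hardcoded_secret": re.compile(
        r"""(?i)\b(?:api[_-]?key|secret|password|token)\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}


def find_secrets(text: str) -> list[str]:
    """Return the names of every pattern that matches somewhere in `text`."""
    return [name for name, pattern in SECRET_PATTERNS.items() if pattern.search(text)]


if __name__ == "__main__":
    snippet = 'client = connect(key="AKIAABCDEFGHIJKLMNOP", password="hunter2hunter2")'
    print(find_secrets(snippet))  # ['aws_access_key_id', 'hardcoded_secret']
```

A check like this would run on the prompt text before submission, so a match can block or redact the upload rather than flag it after the fact.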

Business and Legal Data

Business and legal content continues to dominate the sensitive-data mix, appearing in 57.25% of all sensitive uploads. Legal drafts, deal documents, and financial projections accounted for most exposures, underscoring how deeply AI has entered strategic and advisory workflows.

While some of these uploads occurred in enterprise-licensed environments, a significant portion were traced to consumer AI platforms that train on user content.

Exposure Type

- Legal Discourse (35.0%)

- Mergers & Acquisitions (19.0%)

- Financial Projections (16.0%)

- Investment Portfolios (14.0%)

- Dispute Resolutions (7.0%)

- Sales and Pipeline Data (6.0%)

- Credit and Insurance (3.0%)

Customer Data

Customer data exposures were smaller in volume (3% of sensitive data exposures) but remain among the most critical. These incidents typically originated from sales or support personnel using GenAI tools for drafting or translation tasks that involved client information.

Exposure Type

- Credit Card and Billing Data (58.0%)

- Customer Profiles (20.0%)

- Authentication Data (15.0%)

- Payment Transactions (7.0%)
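Because card and billing data dominates this category, it is worth noting that payment card numbers carry a Luhn (mod 10) checksum, which helps separate them from arbitrary digit strings. The following is a minimal sketch, assuming a hypothetical `find_card_numbers` helper and a simple candidate regex; it is not a description of any vendor's detector, and it will still flag some non-card numbers that happen to pass the checksum.

```python
import re

# Candidate runs of 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn (mod 10) checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings that look like card numbers and pass the Luhn check."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits
```

For example, the widely used test number `4111 1111 1111 1111` passes the check, while changing its last digit makes it fail, so a typo-level variation is not reported as card data.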

Governance and the Policy Gap

Most enterprises have now published formal AI usage policies, but policy alone has not changed outcomes. Awareness is high; enforcement is low.

Harmonic’s data shows that while organizations are educating employees about acceptable AI use, sensitive data exposure continues to rise. The reason is structural: human awareness cannot scale as quickly as AI adoption.

Enterprises that have implemented technical governance report not only fewer exposures but greater productivity gains. Effective governance is not merely defensive; it enables safe, high-value use of sensitive data in AI workflows.

“Organizations that invest in third-party AI governance products are almost two times more likely to report higher levels of value from their AI tools, highlighting a clear link between effective and enforceable governance and the value that organizations get from their AI investments.” - Gartner, How to Mature Generative and Agentic AI Governance for Enterprise Applications

For the majority of companies, this remains a work in progress. To close the gap, governance must evolve from written guidance to real controls.

Implications

The Q3 data illustrates how deeply AI has penetrated the enterprise. Three realities now define the landscape:

- Shadow AI has gone mainstream. Employees freely use AI tools without formal approval, often through personal accounts.

- Business data stays with AI tools. Once information is entered into AI systems, it can remain accessible long after it leaves corporate boundaries.

- Regulatory and reputational pressures are intensifying. As privacy frameworks and AI governance standards mature, enterprises will be expected to prove not only that data is protected but that AI use is accountable and transparent.

Vendor AI transparency is still lacking in many cases, with disclosures about training practices, data retention, and opt-out mechanisms often opaque or missing. Without consistent standards, organizations must assume responsibility for enforcing their own boundaries.

Recommendations

Enterprises should treat AI governance as an operational control layer, but one that enables productivity rather than restricting it.

Embed controls into daily workflows.

Move beyond policy-based instruction to technical enforcement. Implement systems that automatically identify and prevent sensitive data uploads to unsanctioned AI platforms.
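At its core, this kind of enforcement is a per-upload decision: where is the data going, and what does it contain? The sketch below assumes a hypothetical allowlist (`SANCTIONED_HOSTS`) and assumes an upstream classifier has already attached sensitivity labels to the content; the three-way allow/warn/block policy is one possible design, not a prescribed one.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"    # let the upload through but point the user to a sanctioned tool
    BLOCK = "block"


# Hypothetical allowlist of AI destinations the organization has licensed.
SANCTIONED_HOSTS = frozenset({"ai.corp.example.com", "enterprise-chat.example.net"})


def enforce(host: str, sensitive_labels: set[str]) -> Action:
    """Return the action for an upload bound for `host`.

    `sensitive_labels` is assumed to come from an upstream content classifier
    (e.g. {"source_code", "payroll"}); an empty set means nothing sensitive was found.
    """
    if host.lower() in SANCTIONED_HOSTS:
        return Action.ALLOW
    if sensitive_labels:
        return Action.BLOCK
    return Action.WARN
```

Warning rather than blocking on non-sensitive traffic to unsanctioned tools keeps low-risk work moving while still steering users toward approved alternatives.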

Monitor personal account activity.

Track authentication and browser behavior to detect when employees use personal or free AI accounts for work. Offer sanctioned alternatives that deliver comparable utility.
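One simple signal is the email address used to sign in to an AI tool. The sketch below assumes the login email has already been captured (for example by a browser-level agent) and treats any domain outside a hypothetical `CORPORATE_DOMAINS` set as a personal account; real deployments would likely also need to account for SSO identities and aliases.

```python
# Hypothetical corporate email domains; any other domain signing in to an
# AI tool is treated as a personal account.
CORPORATE_DOMAINS = frozenset({"example.com", "eu.example.com"})


def is_personal_account(login_email: str) -> bool:
    """True if the sign-in email is not on a corporate domain."""
    _, sep, domain = login_email.strip().lower().rpartition("@")
    if not sep:
        return True  # no recognizable email address: treat as unmanaged
    return domain not in CORPORATE_DOMAINS


def personal_account_share(logins: list[str]) -> float:
    """Fraction of observed AI-tool sign-ins that use personal accounts."""
    if not logins:
        return 0.0
    return sum(is_personal_account(email) for email in logins) / len(logins)
```

Tracking `personal_account_share` over time gives a rough measure of whether sanctioned alternatives are actually displacing personal accounts.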

Institutionalize data rights.

Integrate opt-out and deletion capabilities directly into governance processes. Provider tools such as OpenAI’s Privacy Portal let users control how their content is used for model training or request data removal. Enterprises should standardize these controls across teams.

Build stronger policy foundations.

Tools like AI Policy Studio can help organizations generate and align policies across departments, offering a practical framework while technical enforcement capabilities mature.

Measure exposure, not just adoption.

Benchmark sensitive-data interactions quarterly to track progress and guide leadership reporting. Quantitative oversight will increasingly be required for compliance and risk audits.
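A quarterly benchmark needs little more than the counts of total and sensitive interactions per quarter. The sketch below uses hypothetical names (`QuarterStats`, `share_change`) and reports both the change in percentage points and the relative change, since the two are easily confused in leadership reporting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuarterStats:
    """Interaction counts for one quarter (hypothetical schema for illustration)."""
    label: str
    total_interactions: int      # all prompts and file uploads observed
    sensitive_interactions: int  # subset flagged as containing sensitive data

    @property
    def sensitive_share(self) -> float:
        """Fraction of interactions that were sensitive (0.0 if none were observed)."""
        if self.total_interactions == 0:
            return 0.0
        return self.sensitive_interactions / self.total_interactions


def share_change(prev: QuarterStats, curr: QuarterStats) -> tuple[float, float]:
    """Return (change in percentage points, relative change in percent).

    The relative change is reported as infinity when the previous share is zero.
    """
    points = (curr.sensitive_share - prev.sensitive_share) * 100
    if prev.sensitive_share == 0:
        return points, float("inf")
    relative = (curr.sensitive_share / prev.sensitive_share - 1) * 100
    return points, relative
```

Applied to this report's own figures, the rise in sensitive share from 22% to 26.38% is 4.38 percentage points, or a relative increase of roughly 20%.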

Shift enforcement to the browser layer.

As AI-native browsers proliferate, governance must occur where work happens. Browser-level controls allow organizations to apply policy at the point of data generation, not retroactively.

Reframe governance as a productivity strategy.

Organizations that can safely use sensitive data in AI contexts will unlock a sustained competitive advantage.